Hive实战操作

Hive实战操作

AlexHive实战操作

mysql配置安装:

安装: mysql / mysql-server

1

2

3yum install -y mysql

yum install -y mysql-server

/etc/init.d/mysqld start安装Hive

1

2

3wget http://archive.apache.org/dist/hive/hive-0.13.0/apache-hive-0.13.0-bin.tar.gz

tar -zxvf apache-hive-0.13.0-bin.tar.gz

cd /usr/local/src/apache-hive-0.13.0-bin/confvi conf/hive-site.xml1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&useSSL=false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

<description>

</description>

</property>

</configuration>vi ~/.bashrc1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21### 底部加入以下配置

### java

export JAVA_HOME=/usr/local/src/jdk1.6.0_45

export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib

# export PATH=$PATH:$JAVA_HOME/bin

### hadoop

export HADOOP_HOME=/usr/local/src/hadoop-1.2.1

### Hbase

export HBASE_HOME=/usr/local/src/hbase-0.98.0-hadoop1

export HBASE_CLASSPATH=$HBASE_HOME/conf

export HBASE_LOG_DIR=$HBASE_HOME/logs

### hive conf

export HIVE_HOME=/usr/local/src/apache-hive-0.13.0-bin

# export PATH=$MAHOUT_HOME/conf:$MAHOUT_HOME/bin:$ZOOKEEPER_HOME/bin:$HIVE_HOME/bin:$PATH

export PATH=$PATH:$JAVA_HOME/bin:$HIVE_HOME/bin:$PATH:$HADOOP_HOME/binsource ~/.bashrc将mysql driver 放入lib目录

1

cp mysql-connector-java-5.1.41-bin.jar /usr/local/src/apache-hive-0.13.0-bin/lib/

测试hive

1

hive

创建表

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18create EXTERNAL TABLE w_a

(

usrid STRING,

age STRING,

sex STRING

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LINES TERMINATED BY '\n';

create EXTERNAL TABLE w_b

(

usrid STRING,

active STRING,

time STRING

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LINES TERMINATED BY '\n'查看表

1

show tables;

查看表结构

1

desc w_a;

导入数据

- a.txt

1

2

3

4user1 27 1

user2 28 1

user3 29 0

...

- a.txt

b.txt

1

2

3

4user1 100 20170301

user3 101 20170302

user4 102 20170303

...导入命令

1

2hive -e "LOAD DATA LOCAL INPATH './a.txt' OVERWRITE INTO TABLE w_a"

hive -e "LOAD DATA LOCAL INPATH './b.txt' OVERWRITE INTO TABLE w_b"查看导入数据

1

select * from w_a;

变链接查询

1

select a.*, b.* from w_a a join w_b b on a.usrid=b.usrid;

1 | hive> select a.*, b.* from w_a a join w_b b on a.usrid=b.usrid; |

从HDFS中导入

1

LOAD DATA INPATH '/user_name.data.utf.txt' OVERWRITE INTO TABLE u_info

overwrite表示加载的数据会覆盖原来的内容

对比本地的方式:LOAD DATA LOCAL INPATH

利用

insert命令导入数据- 执行命令(例子):

1

insert into table table1 select usrid, age from w_alimit 3;

- 也可以支持动态分区插入:

1

insert into table test1 partition(c) select * from test2;

直接通过查询插入

1

create table test2 as select * from test1;

数据导出(导出为本地文件)

1 | insert overwrite local directory '/home/developer/hive_test/1.txt' select usrid, sex from w_a; |

数据导出(导出为HDFS文件)

1

insert overwrite directory '/hive_output' select * from w_b;

Partition

partition是Hive提供的一种机制:用户通过指定一个或多个partition key,决定数据存放方式,进而优化数据的查询,一个表可以指定多个partition key,每个partition在hive中以文件夹的形式存在。

创建表

1

2

3

4

5

6

7

8

9create TABLE p_t

(

usrid STRING,

age STRING

)

PARTITIONED BY (dt STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LINES TERMINATED BY '\n';p1.txt

1

2

3

4user2 28 20170302

user4 30 20170302

user6 32 20170302

user8 34 20170302导入数据

1

2LOAD DATA LOCAL INPATH '/root/hive_test/p1.txt' OVERWRITE INTO TABLE p_t partition(dt='20180311');

## 去掉LOCAL 就是从hdfs导入文件

1 | hive> LOAD DATA INPATH '/root/hive_test/p1.txt' OVERWRITE INTO TABLE p_t partition(dt='20180311'); |

- 查询

1

select * from p_t where dt='20180311';

Transform

- transform功能部分可以用UDF替代,但是如果拼接的字段是根据上一次查询的结果时,UDF就不能用,UDF只能用在本行操作

- transform功能缺点是效率底了点

- vi transform.awk

1

2

3{

print $1"_"$2

}

1 | hive> add file ./transform.awk |

1 | hive> select transform(usrid,age) using "awk -f transform.awk" as (uuu) from w_a; |

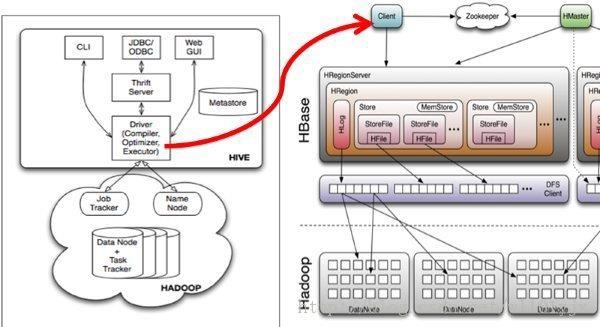

Hive整合hbase

创建Hbase表:

- create ‘classes’,’user’

加入数据:

1

2

3

4put 'classes','001','user:name','jack'

put 'classes','001','user:age','20'

put 'classes','002','user:name','liza'

put 'classes','002','user:age','18'创建Hive表并验证:

- create external table classes(id int, name string, age int)

- STORED BY ‘org.apache.hadoop.hive.hbase.HBaseStorageHandler’

- WITH SERDEPROPERTIES (“hbase.columns.mapping” = “:key,user:name,user:age”)

- TBLPROPERTIES(“hbase.table.name” = “classes”);

再添加数据到Hbase:

- put ‘classes’,’003’,’user:age’,’1820183291839132’